Category: #PrivacyCamp22

-

#PrivacyCamp23: Event Summary

In January 2023, EDRi gathered policymakers, activists, human rights defenders, climate and social justice advocates and academics in Brussels to discuss the criticality of our digital worlds. We welcomed 200+ participants in person and enjoyed an online audience of 600+ people engaging with the event livestream videos. If you missed the event or want a…

-

#PrivacyCamp22: Event Summary

The theme of the 10th-anniversary edition of Privacy Camp was “Digital at the centre, rights at the margins” and included thirteen sessions on a variety of topics. The event was attended by 300 people. If you missed the event or want a reminder of what happened in a session, find the session summaries and video…

-

#PrivacyCamp22: Livestream

Parallel sessions will take place in two rooms: Alice and Bob. This year, the Privacy Camp conference will also be livestreamed, so if you did not register or have trouble connecting to a room, you can follow the livestream here. The sessions will be recorded and shared after the event.…

-

#PrivacyCamp22: Final Schedule

The final programme is here! Find the full schedule of sessions and speakers for the 10th anniversary edition of Privacy Camp on 25 January 2022. View/download the complete schedule in print format here (pdf). Digital at the centre, rights at the margins? After 10 successful editions and serving as the flagship annual event for digital…

-

#PrivacyCamp22: Draft programme schedule

Digital at the centre, rights at the margins? Privacy Camp turns 10 in 2022 and it’s time to celebrate! The special anniversary edition of Privacy Camp 2022 will be the occasion to reflect on a decade of digital activism, and to think together about the best ways to advance human rights in the digital age.…

-

#PrivacyCamp22: Registration opening soon

Watch this space! Registration to #PrivacyCamp22 will open soon.

-

#PrivacyCamp22: Call for panels! (New deadline)

**UPDATE:** The deadline has been extended to 14 November 2021, 23:59 CET. Privacy Camp turns 10. It is time to celebrate. But Privacy Camp 2022 is also the occasion to reflect on a decade of digital activism, and to think together about the best ways to advance human rights in the digital age. The 10th…

-

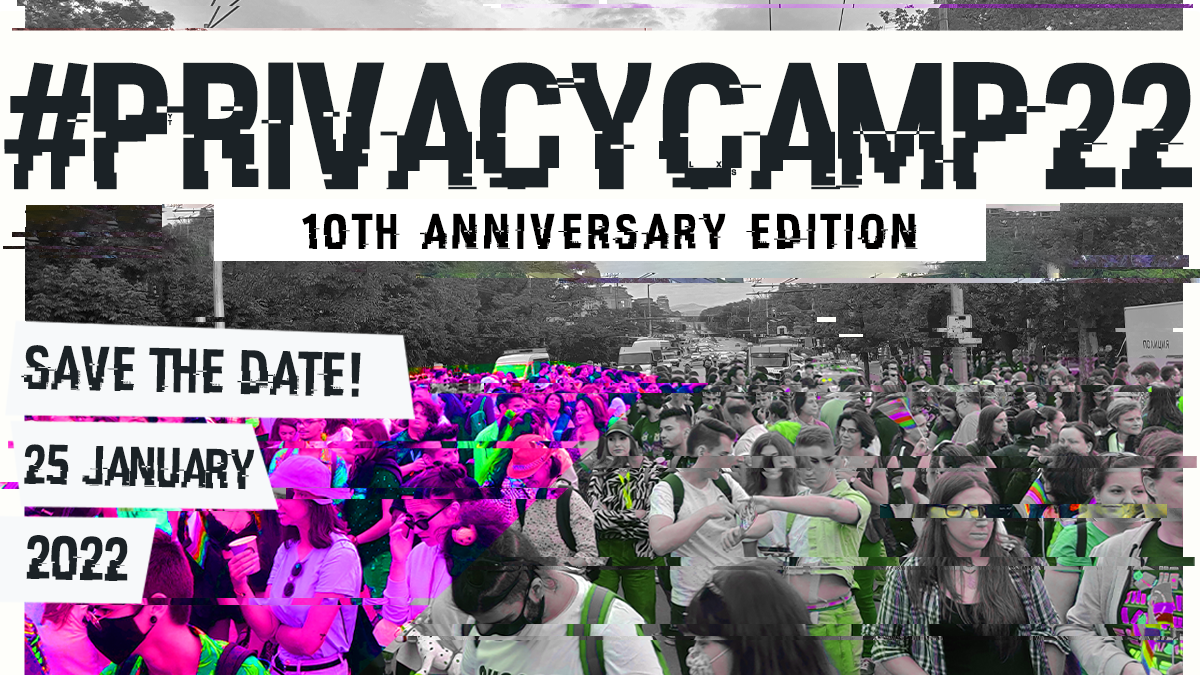

#PrivacyCamp22: Save the date!

We are proud to present the 10th annual Privacy Camp! Join us online on 25 January 2022!